Researchers one step closer to mitigating quantum computing errors

Wednesday, April 24, 2019

Quantum computers have developed rapidly in the last decade, bringing their disruptive capabilities closer to market. By harnessing unique features of the quantum world, such as quantum superposition, they could provide unprecedented computational power and help solve some of society’s greatest challenges. By directly encoding information into quantum many-body states, a small number of quantum bits, or qubits, can replace an exponential number of classical bits. This quantum-enhanced computational capability could then be used, for example, to reduce our impact on the climate through more energy-efficient materials and to design more effective drugs to cure diseases.

To achieve such ambitious goals, however, quantum computers will need to reduce the errors that occur during operation. Users of conventional computers never need to be concerned about hardware errors (as opposed to human errors, such as software bugs) because the performance of the central processing unit is so robust. A classical computation unfolds in a predictable way: it is a deterministic process that starts from point A and finishes at point B through a set of instructions. A quantum computation, on the other hand, is prone to errors due to fluctuations in the environment of the qubits. To compute the correct output (that is, to reach point B), constant monitoring of these effects and appropriate corrective actions are required.

Quantum computing is prone to errors mainly because of two distinct mechanisms: decoherence and the limited accuracy of control. Decoherence arises because quantum coherent superpositions are inherently sensitive to their environment and are affected by defects in the surrounding material. Limitations to control accuracy, on the other hand, arise because quantum computation is fundamentally analogue (gate operations are continuous rather than discrete) and is thus directly affected by the finite precision of control during gate operations.
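The effect of finite control precision can be made concrete with a small numerical sketch. The example below is an illustration only and is not taken from the work discussed in this article: it assumes the intended gate is a rotation by π about the x axis, that the only error is a fractional over-rotation `epsilon` (a hypothetical parameter), and it uses the standard formula for the average fidelity between two unitary gates.

```python
import numpy as np

# Pauli X and the 2x2 identity
X = np.array([[0, 1], [1, 0]], dtype=complex)
I2 = np.eye(2, dtype=complex)

def rx(theta):
    """Single-qubit rotation by angle theta about the x axis."""
    return np.cos(theta / 2) * I2 - 1j * np.sin(theta / 2) * X

def average_gate_fidelity(u_ideal, u_actual):
    """Average fidelity between two unitaries of dimension d:
    F = (|Tr(U_ideal^dagger U_actual)|^2 + d) / (d * (d + 1))."""
    d = u_ideal.shape[0]
    overlap = abs(np.trace(u_ideal.conj().T @ u_actual)) ** 2
    return (overlap + d) / (d * (d + 1))

def over_rotation_fidelity(epsilon):
    """Fidelity of an intended pi rotation that over-rotates by a
    fraction epsilon (an assumed, purely illustrative error model)."""
    return average_gate_fidelity(rx(np.pi), rx(np.pi * (1 + epsilon)))

# Because the rotation angle is continuous, any miscalibration of the
# pulse lowers the fidelity a little.
for eps in (0.0, 0.001, 0.01):
    print(f"epsilon = {eps}: fidelity = {over_rotation_fidelity(eps):.6f}")
```

In this model the infidelity grows roughly quadratically with `epsilon`, which is one way to see why gate quality is tied so directly to the precision of the control signals.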

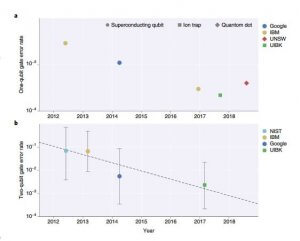

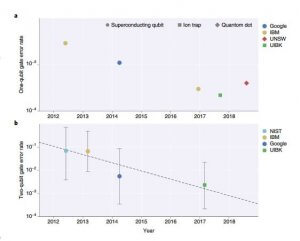

Figure 1. Pace of development of quantum computing

Ultimately, these errors limit the length of the quantum computations that can be performed reliably on the hardware. Reducing them remains a major challenge across all of the different hardware approaches to quantum computing. Writing in Nature Electronics, Henry Yang and colleagues now report an approach to reducing error rates in qubits made from silicon metal–oxide–semiconductor (SiMOS) quantum dots.

The researchers, who are based at the University of New South Wales, the University of Sydney and Keio University, assessed the strength and type of the dominant errors limiting the performance of single-qubit gate operations. Then, using a family of randomized benchmarking protocols, they explored how these errors could be mitigated through pulse engineering techniques: the optimization of control signals to tackle fluctuations in the energy spectrum of the qubit. Through this iterative design process, the researchers achieved very-high-quality gate operations (Clifford gate fidelities for a single qubit of 99.957%) that are comparable to other leading candidate technologies for quantum computing (Fig. 1).

Yang and colleagues applied randomized benchmarking, a standard method to identify the net error rate for basic qubit operations, combined with a new method called unitarity benchmarking. This approach allowed them to assess the relative impact of the two types of errors (decoherence or control errors) and to implement different pulse design strategies. Designing the control pulses to address the specific types of noise led to a dramatic improvement in the quality of gate operations and, furthermore, provided an understanding of how much improvement is possible through pulse engineering alone.
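As a rough illustration of how such benchmarking numbers are obtained, the sketch below uses the textbook randomized benchmarking model, in which the average survival probability after m random Clifford gates decays as A·p^m + B, and the average Clifford fidelity is 1 − (1 − p)(d − 1)/d for a system of dimension d. The data are synthetic and noise-free, chosen only so that the fit reproduces the 99.957% figure quoted above. The function names, the fixed offset B = 0.5 and the unitarity relation used in `incoherent_error_bound` are assumptions made for this example, not details taken from the researchers' analysis.

```python
import numpy as np

def clifford_fidelity_from_decay(p, d=2):
    """Average Clifford fidelity for decay parameter p in dimension d."""
    return 1.0 - (1.0 - p) * (d - 1) / d

def fit_decay(lengths, survival, offset=0.5):
    """Fit survival = A * p**m + offset with a log-linear least-squares fit.
    Assumes the offset is known (0.5 for an ideal single qubit)."""
    y = np.log(np.asarray(survival, dtype=float) - offset)
    slope, intercept = np.polyfit(np.asarray(lengths, dtype=float), y, 1)
    return np.exp(slope), np.exp(intercept)  # (p, A)

def incoherent_error_bound(unitarity, d=2):
    """Smallest error rate compatible with a measured unitarity u,
    using the relation u >= p**2 (equality for purely incoherent noise)."""
    return (d - 1) / d * (1.0 - np.sqrt(unitarity))

# Synthetic sequence lengths and survival probabilities (not measured data)
p_true = 1.0 - 2.0 * (1.0 - 0.99957)  # for d = 2, error rate r = (1 - p) / 2
lengths = np.array([1, 50, 100, 500, 1000, 2000])
survival = 0.5 * p_true ** lengths + 0.5

p_fit, a_fit = fit_decay(lengths, survival)
fidelity = clifford_fidelity_from_decay(p_fit)
print(f"fitted decay p = {p_fit:.6f}, Clifford fidelity = {fidelity:.5%}")
```

Comparing the total error rate from the decay fit with the bound from the unitarity gives a sense of how much of the error is incoherent, and therefore how much could in principle be removed by better control pulses.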

The work of Yang and colleagues is another important step on the path to practical quantum computing. But how many steps remain? And where do the leading hardware approaches to quantum computing currently stand? Answering these questions requires a multi-dimensional view, with many trade-offs and some unknowns. Important challenges include assessing and reducing system errors that originate, for example, from cross-talk between qubits and from correlated errors across clock cycles, as well as evaluating how error rates scale as the number of qubits in the architecture grows. Possibly the most critical current challenge is reducing the error rates for two-qubit entangling operations, which are required to achieve useful large-scale quantum computation. The leading results for this type of entangling operation over the past several years show that, in terms of error rates, superconducting qubits and ion traps have leap-frogged each other as record holders. Notably, the trend here is a roughly exponential pace of improvement of two-qubit gate operations, with a tenfold improvement in the performance of entangling gates every 2.8 years. This exponential pace suggests exciting prospects for quantum computing in the not-too-distant future.
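To get a feel for what a tenfold improvement every 2.8 years implies, the short sketch below turns that figure into a simple exponential model. It assumes the trend continues unchanged, which is an extrapolation rather than a prediction, and the starting and target error rates are hypothetical values chosen for illustration.

```python
import math

TENFOLD_PERIOD_YEARS = 2.8  # figure quoted in the article

def projected_error(initial_error, years):
    """Error rate after `years`, assuming a tenfold drop every 2.8 years."""
    return initial_error * 10 ** (-years / TENFOLD_PERIOD_YEARS)

def years_to_reach(initial_error, target_error):
    """Years needed to go from initial_error to target_error on this trend."""
    return TENFOLD_PERIOD_YEARS * math.log10(initial_error / target_error)

# Time for the error rate to halve under the same trend
halving_time = TENFOLD_PERIOD_YEARS * math.log10(2)

print(f"halving time: {halving_time:.2f} years")
print(f"1e-2 to 1e-4 takes {years_to_reach(1e-2, 1e-4):.1f} years")
```

Under this assumption the error rate halves roughly every ten months, and a hundredfold reduction takes 5.6 years.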